How Useful are Educational Questions Generated by Large Language Models?

Whether or not you work in tech, large language models have probably been dominating your feed recently. Since OpenAI released ChatGPT, these models have captivated the world. They are rife with seemingly endless applications: acting as the new Google, writing novels or movie scripts, or generating educational content.

And yet, it is critically important that researchers working in natural language processing (NLP) stop and thoroughly assess the usefulness and applicability of these models. Rigorously establishing whether models like ChatGPT work in practice, and isolating where they fall short, is essential to responsibly directing the future of this research domain.

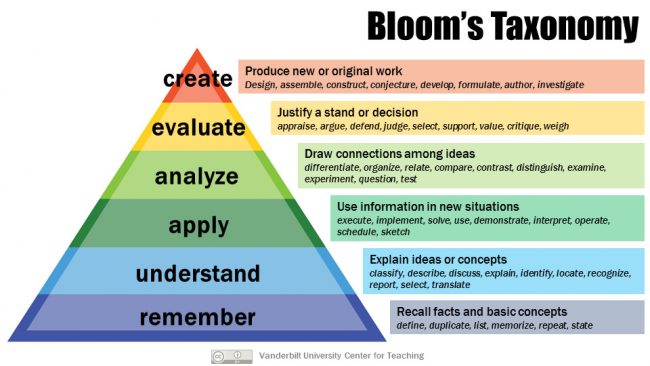

Our recent paper, “How Useful are Educational Questions Generated by Large Language Models?”, does just that for the intersection of NLP and education. Using controllable generation and few-shot learning, we generate diverse educational questions across all levels of Bloom’s taxonomy, at varying difficulties, in a variety of domains. We then asked 25 teachers to assess these questions and judge whether, in their eyes, the questions are high quality and viable for use in the classroom.

Armstrong, P. (2010). Bloom’s Taxonomy. Vanderbilt University Center for Teaching. Retrieved [03/27/2023] from https://cft.vanderbilt.edu/guides-sub-pages/blooms-taxonomy/.


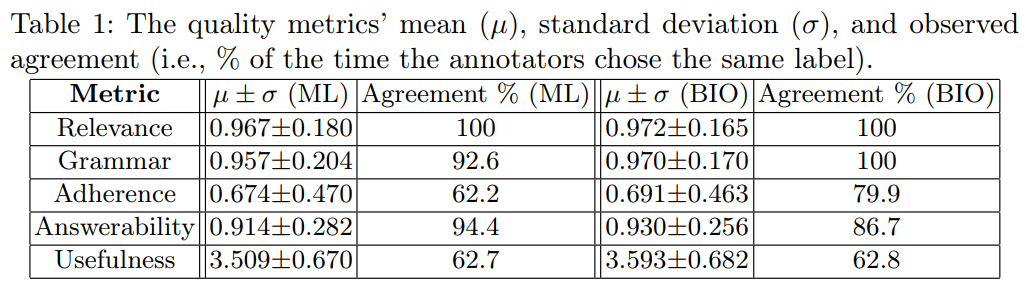

Overwhelmingly, the answer is yes! AI can generate questions that are highly useful for teaching. The generated questions are relevant to the topic 97% of the time, grammatically correct 96% of the time, and answerable from the inputs used to generate them 92% of the time. At this level of quality, AI performs on par with human teachers and in some cases may even provide better suggestions.

Furthermore, these questions received an average rating of 3.6 out of 4 on the ‘usefulness’ metric we defined (see the paper for details of this metric). The quality and usefulness of these automatically generated questions speak volumes about how they can benefit teachers and students alike.

These results might seem obvious given all the buzz surrounding the capabilities of large language models and their potential uses in education. However, little to no prior work has presented a systematic, thorough evaluation of these abilities carried out by real-world teachers, the people who will actually use the text these models generate. These results demonstrate the potential of this technology and how large language models may change education for millions of people around the world.

Resources: